Monitoring Microsoft Distributed File Systems

eG Enterprise provides a specialized monitoring model for Microsoft DFS. This model enables administrators to run periodic health checks on DFS and ensure that DFS-managed files and folders are available and accessible at all times.

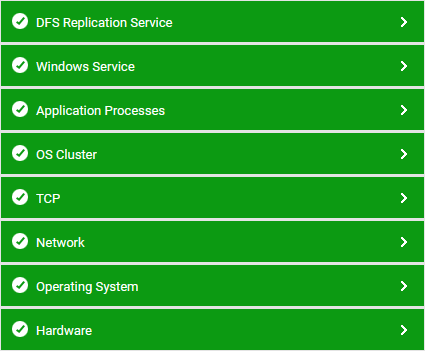

Figure 1: Layer model of Microsoft DFS

Each layer of this model is mapped to tests that check the following at configured intervals:

- Are referral requests being processed quickly? Which DFS namespace is the slowest in responding to referral requests?

- How effective is the compression algorithm used by the DFS Replication service? Is it saving bandwidth during replication? Which replication folder had the lowest bandwidth saving? Are any replication connections consuming excessive bandwidth?

- Are the Staging and Conflict and Deleted folders sized adequately for all replication folders?

- Are the quotas of the Staging and Conflict and Deleted folders configured correctly?

- Is any replication folder experiencing a replication bottleneck? If so, which one?

- How heavy is the API request load on the namespace server, and can the server handle it?

These metrics shed light on the following:

- Potential slowdowns when accessing a namespace on the namespace server

- Sizing inadequacies of the namespace server

- Bottlenecks in replication and the replication folders they affect

- The impact of replication on bandwidth usage

- How quota configurations affect the speed and efficiency of replication
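The bandwidth questions above come down to a simple ratio. DFS Replication uses Remote Differential Compression (RDC) to reduce the data it sends, so the saving for a replication folder can be expressed as the share of data that did not have to cross the wire. The Python sketch below is illustrative only and rests on assumptions: it assumes you already have, per replication folder and per measurement interval, the full size of the replicated files and the bytes actually transferred. The `FolderStats` class, its field names, and the folder names and values are hypothetical, and eG Enterprise's own measure may be computed differently.

```python
from dataclasses import dataclass


@dataclass
class FolderStats:
    """Hypothetical per-folder replication totals for one measurement interval."""
    name: str
    size_of_files_bytes: int  # bytes that would be sent without compression
    bytes_transferred: int    # bytes that actually crossed the wire


def savings_percent(stats: FolderStats) -> float:
    """Bandwidth saved as a percentage: 100 * (1 - transferred / full size)."""
    if stats.size_of_files_bytes == 0:
        return 0.0  # nothing replicated in this interval, so nothing saved
    return 100.0 * (1.0 - stats.bytes_transferred / stats.size_of_files_bytes)


def lowest_saving(folders: list[FolderStats]) -> FolderStats:
    """Return the replication folder with the lowest bandwidth saving."""
    return min(folders, key=savings_percent)


if __name__ == "__main__":
    # Hypothetical sample values, for illustration only.
    sample = [
        FolderStats("Projects", 10_000_000, 2_500_000),
        FolderStats("Profiles", 4_000_000, 3_600_000),
        FolderStats("Archive", 8_000_000, 800_000),
    ]
    for folder in sample:
        print(f"{folder.name}: {savings_percent(folder):.1f}% saved")
    print("Lowest saving:", lowest_saving(sample).name)
```

With these sample values, Profiles is reported as the folder with the lowest saving (about 10%). A negative result would indicate that more bytes crossed the wire than the full size of the files replicated.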

The sections that follow elaborate only on the DFS Replication Service layer; the other layers are already discussed in the Monitoring Unix and Windows Servers document.